AI Data Centers: Energy Consumption and Impact on Texas

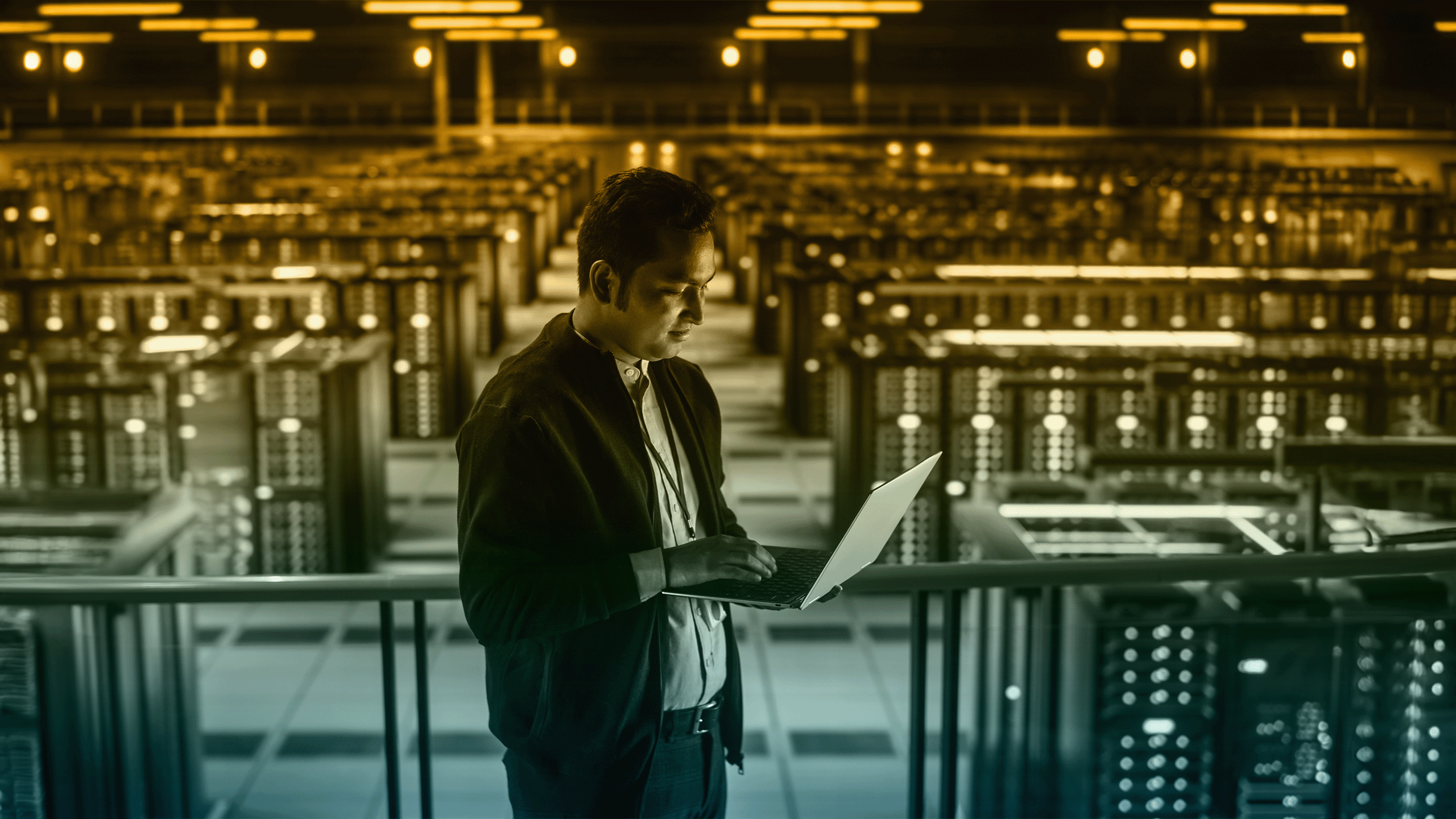

Artificial intelligence (AI) is reshaping industries and redefining what’s possible. At the heart of this AI revolution are the powerhouses that fuel its growth: AI data centers. These specialized facilities are the backbone of the AI industry, providing the immense computing power needed to train and run sophisticated AI models, particularly the generative AI models behind tools such as ChatGPT, which have captured public attention in recent years.

As demand for AI increases, so does the demand for these data centers, bringing with it unprecedented challenges in energy consumption and infrastructure management. This is particularly evident in Texas, a state that has become a hotbed for tech companies and their new data centers.

In this article, we’ll delve into the world of AI data centers, exploring their energy needs, their impact on Texas’s power grid, and the strategies being developed to balance technological progress with environmental responsibility.

What Is an AI Data Center?

An AI data center is a specialized facility designed to host the hardware and software systems required for training, developing, and running AI models. Unlike traditional data centers that primarily focus on storage and basic computational tasks, AI data centers are equipped with high-performance computing resources optimized for the complex calculations and massive data processing demands of AI workloads.

At the core of these facilities are powerful GPUs (graphics processing units), often supplied by companies like NVIDIA, which are particularly adept at handling the parallel processing tasks that AI algorithms require. These GPUs work in tandem with high-speed connectivity, advanced cooling systems, and specialized software to create an environment where AI can thrive.

Large language models (LLMs), a subset of generative AI models, have gained significant attention for their ability to understand and generate human-like text. These models, such as GPT-3 and its successors, require enormous amounts of computing power both for training (the process through which the model learns from data) and for inference (the process of using the trained model to generate outputs).

The infrastructure to support LLMs includes:

- Massive GPU clusters. Hundreds or thousands of GPUs can work in parallel to process vast amounts of data.

- High-bandwidth networks. These ensure rapid data transfer between computing units.

- Extensive storage systems. These house the enormous datasets used for training and the models themselves.

- Advanced cooling solutions. Liquid cooling and similar systems manage the heat generated by intense computation.

This represents a significant leap forward from traditional data center infrastructure, reflecting the unique demands of AI workloads.

Energy Consumption in AI Data Centers

The power requirements of AI data centers are staggering, far exceeding those of traditional data centers. This increased electricity demand is driven by the nature of AI workloads and the specialized hardware they require, including advanced processors from companies like Intel.

How Much Power Do AI Data Centers Consume?

While exact figures vary with the size and specific use case of a data center, AI facilities generally consume significantly more power than their traditional counterparts, because server racks packed with GPUs draw far more electricity than racks of conventional servers. This increased power consumption translates to higher energy costs and greater demands on local power grids.

Factors Driving High Energy Consumption

Several factors contribute to the high energy demands of AI data centers:

- Intensive computational requirements. AI models, especially during the training phase, require an enormous number of calculations. This translates directly into high energy consumption by the GPUs and CPUs performing them.

- 24/7 operation. Many AI workloads run continuously, meaning the data centers must operate at high capacity around the clock.

- Cooling needs. The heat generated by high-performance computing equipment necessitates advanced cooling systems, which themselves consume significant amounts of energy.

- Data transfer and storage. Moving and storing the vast amounts of data required for AI training and inference also contributes to energy consumption.

- Redundancy systems. To ensure uninterrupted operation, AI data centers often have redundant power and cooling systems, further increasing overall energy use.

As the demand for AI applications grows, addressing these energy consumption challenges becomes increasingly critical.

Impact on Texas’s Energy Grid

Texas has become a major hub for data centers, including those focused on AI. This concentration of high-energy facilities significantly impacts the state’s power infrastructure.

AI Data Centers in Texas

Texas has attracted numerous tech giants and AI-focused companies to build data centers within its borders. Some notable examples include:

- Microsoft’s expansion of its data center presence in San Antonio

- Google’s data center in Midlothian

These facilities, along with many others, contribute to Texas’s growing reputation as a tech hub. However, they also place increasing demands on the state’s energy resources, raising concerns about potential outages during peak usage times.

Texas Energy Consumption and Challenges

The proliferation of AI data centers in Texas contributes to several energy-related challenges:

- Increased demand. The high energy consumption of AI data centers adds a significant load to the Texas power grid, particularly during peak usage times.

- Grid stability. The constant, high-level power draw of these facilities can strain grid infrastructure, potentially leading to stability issues.

- Energy mix considerations. Texas’s energy mix includes a growing proportion of renewable sources such as wind and solar, whose output varies with the weather. That variable supply must be balanced against AI data centers’ consistently high energy demands.

- Local impact. Communities hosting these facilities may experience changes in their local power dynamics and pricing.

- Seasonal challenges. Texas’s extreme weather events, such as summer heat waves or winter storms, can exacerbate the challenges of meeting high energy demands while maintaining grid stability.

Addressing these challenges requires cooperation between data center operators, energy providers, and state regulators to ensure a reliable and sustainable power supply.

Future Trends and Predictions

AI infrastructure and data centers are evolving rapidly, driven by advancements in AI technology and growing awareness of energy concerns. Several key trends are shaping the future of this industry.

Growth of AI Data Centers

The demand for AI applications and services is projected to grow exponentially in the coming years. This growth is driven by increasing adoption across industries, from healthcare and finance to manufacturing and retail. As AI becomes more sophisticated and accessible, its applications are expanding rapidly, fueling the need for more computational power.

This growth will drive a corresponding increase in AI data center capacity and likely lead to even greater energy demands, making efficiency improvements increasingly important. The scale of this expansion is significant, with some industry analysts predicting a doubling or even tripling of AI-specific data center capacity within the next five years. This surge in infrastructure is necessary to support the training and deployment of increasingly complex AI models, which require massive amounts of data processing and storage.

Innovations in Energy Efficiency

Several innovative approaches are being developed to address the energy challenges posed by AI data centers:

- Advanced cooling technologies. New cooling methods, such as immersion cooling and advanced liquid cooling systems, promise to reduce the energy needed for thermal management.

- AI-optimized hardware. Next-generation GPUs and specialized AI chips are being designed with energy efficiency in mind, potentially offering more computations per watt.

- Software optimizations. Improvements in AI algorithms and model architectures could reduce the computational resources required for training and inference.

- Edge computing. Pushing some AI workloads to edge devices could reduce the load on centralized data centers.

- Renewable energy integration. Many data center operators are investing in or partnering with renewable energy projects to offset their carbon footprint.

These innovations aim to balance the growing demand for AI computing power with the need for sustainable operations.

Strategies To Reduce Energy Usage

As the energy consumption of AI data centers continues to be a concern, the following strategies are being implemented to reduce their power usage and environmental impact.

Efficient Equipment

Choosing and optimizing equipment for energy efficiency is a key strategy:

- High-efficiency servers. Selecting servers and GPUs designed for optimal performance per watt.

- Dynamic power management. Implementing systems that can adjust power usage based on workload demands.

- Modular data center design. Creating flexible layouts that can be optimized for cooling and power distribution.

Power Supply Improvements

Improving the efficiency of power delivery and management systems can significantly reduce overall energy consumption:

- High-efficiency power supplies. Using power supplies with 80 Plus Platinum or Titanium certification, the program’s two highest efficiency tiers.

- DC power distribution. Some facilities are exploring DC power distribution to reduce conversion losses.

- Energy storage systems. Implementing battery or other energy storage solutions to manage peak loads and integrate renewable energy sources.

Cooling Innovations

Cooling remains a major consumer of energy in data centers. Innovative cooling strategies include:

- Free cooling. This refers to using outside air for cooling when environmental conditions permit.

- Liquid cooling. There are various forms of liquid cooling, from rear-door heat exchangers to direct-to-chip solutions.

- AI-driven cooling management. AI can optimize cooling system operations in real time.

AI Data Centers’ Environmental Impacts and Solutions

The carbon footprint of AI data centers is significant, primarily due to their energy consumption. However, the exact impact depends on several factors:

- Energy source. The carbon intensity of a facility’s electricity depends on the local grid’s energy mix, which in Texas includes both fossil fuels and renewables.

- Operational efficiency. More efficient data centers have a lower carbon footprint.

- Lifecycle considerations. The way data center equipment is produced and disposed of also contributes to the overall environmental impact.

Data center operators and AI companies are implementing various strategies to reduce their environmental impact:

- Renewable energy adoption. Many companies are investing in or purchasing renewable energy to power their operations.

- Carbon offsetting. Some operators are investing in carbon offset projects to balance their emissions.

- Circular economy practices. These may include recycling and reuse programs for data center equipment.

- Water conservation. In regions like Texas, where water scarcity can be an issue, water-efficient cooling is important.

- Green building practices. Data centers can be constructed with sustainable materials and designs.

By implementing these strategies, the AI industry aims to balance the growing demand for computing power with environmental responsibility.

The Bottom Line on AI and Energy

AI technology isn’t just about the cool new features on your smartphone. The massive data centers powering these innovations are straining power grids across the country, especially in Texas. These facilities are like electricity-hungry giants, and their appetite is only growing.

So, before you ask your AI assistant to write your next essay or generate an image, remember that those convenient services have a real-world energy cost.

Ready to take control of your own energy usage in this AI-driven world? Consider a prepaid electricity plan with Payless Power. It’s a smart way to manage your energy consumption and costs, even as the big tech companies figure out how to make their data centers more efficient.

Stay informed, make conscious choices, and let’s work together to keep Texas powered up responsibly in this exciting age of AI.
